Getting back into it after a few years away. I began by reading the 2022-09-12 version of The Swift Programming Language.

Regex result builder which looks nice(see swift.org/community)

A few interesting blog posts on swift.org:

Talks about the diverse range of editors beyond Xcode, and SourceKit LSP.

community has adopted it in VSCode, NeoVim, Emacs etc

You’ve already been using this on Apple platforms (e.g. compile iPad app on Mac). They’re extending this to allow you to build Linux binaries on macOS. It’s called the Static Linux SDK for Swift. “Built on Swift Package Manager support for SDK”, whatever that means.

An example: building a web service on Mac to run on Linux server. swift build on Mac runs for macOS.

Produces a fully statically-linked binary. First you do swift sdk install (…). Then you pass the --swift-sdk flag. The one they show has MUSL, which is some libc, I guess it’s statically linked.

swift-foundation first shipped in iOS and macOS last year. There are SF- proposals that they list.

Won’t make notes here since there’s a session that I’ll watch.

Something about implicitly built modules, and how explicitly built modules lead to more parallelism in builds etc. There’s a session on it.

struct File: ~Copyable with a deinit

any typesI think they’ve expanded it (was introduced last year). Come back to this when I know something about C++. Still an evolving thing since C++ is huge.

Think I understand this already; Doug’s Compiler Corner post did a good job of explaining it and how it generalises the current throws, rethrows, and non-throwing functions.

Same module error handling seems to be their main recommended use case.

Improvements to data race checking:

Sendable type from one isolation domain to another (e.g. if it’s not used in the original domain after sending)There’s a new Synchronization module which has things like an Atomic<Int> with methods like wrappingAdd(1, ordering: .relaxed), and a Mutex that has .withLock { … } which ensures mutually exclusive access

I came into this wanting to know more about the any MyProtocol meaning, and also the meaning of “primary associated type”, neither of which are explained in TSPL but which I’d heard elsewhere (possibly in this talk at some other time).

Builds on “Embrace Swift generics” talk, will watch that after

“Let’s start by learning how protocols with associated types interact with existential types.”

Animal has an associated CommodityType that they .produce(), then we have a Farm with an array var animals: [any Animal].

“… the

any Animaltype has a box(ed?) representation that has the ability to store any type of animal dynamically”

“…the strategy of using the same representation for different concrete types is called type erasure”

Now explains why animals.map { $0.produce() } returns [any Food]:

“The return type of

produce()is an associated type. When you call a method returning an associated type on an existential type, the compiler will use type erasure to determine the result type of the call. Type erasure replaces these associated types with corresponding existential types that have equivalent constraints.”

The type any Food is called the upper bound of the associated CommodityType.

Associated types appearing in the result of a function declaration are said to be in a producing position. Associated types in producing position are type erased to their upper bound.

On the other hand, associated int he parameter list of a function declaration are said to be in the consuming position (e.g. eat(_: FeedType))

“type erasure does not allow us to work with associated types in consuming position. Instead you must unbox the existential

anytype by passing it to a function that takes an opaquesometype.

We add var isHungry: Bool to Animal. We have Farm.feedAnimals() which feeds all the hungry animals. And a hungryAnimals property that initially returns [any Animal], but which we decide to optimise by returning animals.lazy.filter(\.isHungry) and hence returns LazyFilterSequence<[any Animal>]. But the client doesn’t care about this implementation detail.

So we can use an opaque result type to hide the concrete type. But if we just wrote var hungryAnimals: some Collection we’d be hiding too much type information - we wouldn’t know the collection’s Element type.

So we can use a constrained result type (new in Swift 5.7). Written by applying type arguments in angle brackets after the protocol name, e.g. some Collection<any Animal>.

This works because the Collection protocol declares that the Element associated type is a primary associated type, which is declared in angle brackets after the protocol name:

protocol Collection<Element>: Sequence {

associatedtype Element

...

}

“Often you’ll see a correspondence between the primary associated types of a protocol and the generic parameters of a concrete type conforming to this protocol” – e.g.

Array<Element>,Set<Element>

And in Swift 5.7 we have constrained existential types (i.e. can now do any Collection<Element>.)

Imagine we have:

struct Cow: Animal {

func eat(_: Hay) { ... }

}

struct Hay: AnimalFeed {

static func grow() -> Alfalfa { ... }

}

struct Alfalfa: Crop {

func harvest () -> Hay { ...

}

let cow: Cow = ...

let alfalfa = Hay.grow()

let hay = alfalfa.harvest()

cow.eat(hay)

and also similarly with Scratch, which we grow() to create Millet, which a Chicken can then eat(:_).

So, how will Farm’s feedAnimals() work? It’s going to loop through its hungryAnimals, and call feedAnimal(animal).

At that point, we need to “unbox the existential type”, which seems to mean something (?) happening to turn any Animal into some Animal.

private func feedAnimal(_ animal: some Animal)`

But what does that implementation look like?

(By the way, I didn’t see TSPL talking about using some in a producing position, but we’re seeing it here…)

So we do this:

let crop = type(of: animal).FeedType.grow()

let feed = crop.harvest()

animal.eat(feed)

(Is that type(of: animal) known at compile time?)

But apparently this doesn’t guarantee that we get back the same type of animal feed we started with.

Oh, looks like this is just getting at making sure we use a same-type requirement with a where clause:

associatedtype CropType: Crop where CropType.FeedType === Self

(I think this is nothing new, might have missed something here.)

This seems to be building up the thing from before of growing crops, which we then harvest, which we then feed to multiple animals. Starts with concrete example with a cow etc, and then shows how generics allow us to model this.

Says that this is a really common pattern:

func feed<A>(_ animal: A) where A: Animal

which can now be expressed (with identical meaning) in terms of the protocol conformance as

func feed(_ animal: some Animal)

(I think this is new in Swift 5.7.)

The specific underlying type that is substituted in to an opaque type is called the underlying type.

Local variables with opaque type must always have an initial value.

Think of any Animal as a box, formally called an existential type.

How do we call animal.eat(_:) on an any Animal? We need to unbox it – i.e. convert an instance of any Animal to some Animal by unboxing the existential type. “Opening type-erased boxes”. New in Swift 5.7. (See the full implementation, using type(of: animal in the talk I described above.)

In general, write some by default.

I think this is a concept that is more simple than structured concurrency (that is, Tasks and the language’s automatic management of their hierarchy, and the ability to manage a group of them). It’s just the idea that a function is able to suspend its execution (that is, give up control of a thread but returning control to the system instead of the caller) for the system to resume it later. This is a concept which can be informally implemented via e.g. completion handlers, but then the language is unable to enforce things that it does for synchronous code, such as that a function either returns a value or throws an error. Notice also that this has nothing to do with actors, which although integrated with the concurrency system, are simply a means to protect mutable state.

Notice that SE-0296 – Async/await says:

Because only async code can call other async code, this proposal provides no way to initiate asynchronous code. This is intentional: all asynchronous code runs within the context of a “task”, a notion which is defined in the Structured Concurrency proposal.

Read-only can be async too, e.g. await maybeImage?.thumbnail. They need an explicit getter so they can be marked get async. Initializers can be async too.

await also works in for loops for iterating over async sequences. See the “Meet AsyncSequence” section.

When a function resumes, there’s no guarantee it’ll do so on the same thread as before.

XCTest supports async test functions out of the box — removing the need for expectations.

(They don’t address what to do if you’d like a timeout.)

How do you call async code in a context that doesn’t support concurrency? This is where a taste of Task comes in.

Task {

// something

}}

The compiler provides async alternatives to Objective-C methods that take completion handlers.

Furthermore, given delegate or data source methods that receive a completion handler (which are used to inform the framework when some work has completed), the compiler creates an async alternative.

We recommend that

asyncfunctions omit leading words likeget, that communicate when the results of a call are not directly returned.

To use a callback method inside an async function? Use withCheckedThrowingContinuation. The continuation has resume(throwing:) and resume(returning:) methods.

A continuation must be resumed exactly once – < 1 results in a warning, > 1 results in a fatal error.

I thought perhaps it would be interesting to look at this next instead of going on to structured concurrency and actors, since, just like async/await, it’s just another extension of existing synchronous programming ideas.

Unlocks the for (try) await syntax.

AsyncSequence has all the expected Sequence functionality like map, reduce, dropFirst().

AsyncSequencewill suspend on each element, and resume when the underlying iterator produces a value or throws.

Unlike Sequence, they can throw an error.

I don’t understand what happens if multiple people share an async sequence. Need a deeper dive into the documentation on this one.

Bear in mind that it’s quite common for an async sequence not to terminate. (You might, for example, want to wrap the iteration in a separate Task in that case. That way, you can also externally cancel the iteration by cancelling the task. Presumably, iteration has no special handling of cancellation and you still need to cooperate and check for it.)

AsyncSequence

For example, you could await center.notifications(named: …).first { … }.

FileHandle adds bytes: AsyncBytes

AsyncSequence that converts an AsyncSequence of bytes into one of linesURL adds convenience resourceBytes: AsyncBytes and lines: AsyncLineSequence<AsyncBytes> async sequences.URLSession has instance method func bytes(for: URLRequest) async throws -> (AsyncBytes, URLResponse)

NotificationCenter adds func notifications(named: Notification.Name, object: AnyObject) -> Notifications

AsyncSequence?Let’s see how to adapt existing patterns such as (callbacks / delegates) that are executed multiple times, which don’t require any response back.

Our bridge to the AsyncSequence world is AsyncStream, which vends a continuation with a yield function (and also an onTermination which they just say “handles termination and cleanup” – one to understand better)

let quakes = AsyncStream(Quake.self) { continuation in

let monitor = QuakeMonitor()

monitor.quakeHandler = { quake in

continuation.yield(quake)

}

continuation.onTermination = { _ in

monitor.stopMonitoring()

}

monitor.startMonitoring()

}

So, something that’s not clear to me is what the consequences are of the fact that simply creating this stream starts some work happening. Is that different to the way things work in Combine / Rx? I don’t know enough about those models either to have much to say on this yet though.

Sounds like there’s other stuff to understand about AsyncSequence, like how it handles buffering (and what that even means).

There’s also AsyncThrowingStream if you want to be able to throw errors.

I’m not sure what the correct language is that corresponds to concepts like “publish an element”, if I wanted to document an API.

I think — from seeing one of the Swift Evolution proposals — that async sequences are bound to some concept of time and of representing it. But that wasn’t mentioned here. Another one to look into.

Sounds like this is the package that introduces interaction with Clock.

Combining async sequences into a single output

Zip

Combines values into tuples. Rethrows errors. Each side is waited concurrently (i.e. one doesn’t block the other)

Merge

Combine multiple AsyncSequences into one AsyncSequence

If any of the iterations produces an error, the other iterations are cancelled.

There are some APIs for leveraging the new Clock, Instant and Duration in Swift 5.7. Let’s first understand what these types are.

Clock: Protocol for defining time. Defines a way to wake up after a given instant and a concept of now. Two of the most common ones are SuspendingClock (stops when machine sleeps) and ContinuousClock (always runs). We have clock.sleep(until:) and clock.measure { … }.Debounce – debounce(for: .milliseconds(300))

Awaits a quiescence period to produce events. Rethrows failures immediately. Used for example for rate limiting searches when user enters input

Chunks – groups elements into collections by count, time, or content

e.g. let batches = outboundMessages.chunked(by: .repeating(every: .milliseonds(500))

Used e.g. for batching requests to the server

Initialize Dictionary, Set or Array from (known finite) async sequences

Structured programming is an idea we take for granted these days – it makes control flow more uniform. For example, it ties together control flow and variable lifetime.

Asynchronous code with completion handlers is unstructured. (I think that some of this we’ve already seen in the async/await talk – unable to throw errors, unable to use loops).

Let’s continue beyond what we saw in the async/await talk. We’ve seen how to use async/await to generate thumbnails sequentially in a loop. But want if we want to do it concurrently, so that multiple downloads can happen in parallel?

You can create additional tasks to add concurrency to a program. A task provides a fresh execution context to run asynchronous code. Each task runs concurrently with respect to other execution contexts.

They’ll be automatically scheduled to run in parallel when safe and efficient to do so.

Calling an async function does not create a task for the call. You create tasks explicitly.

Tasks form part of a hierarchy called a task tree.

A parent task can only finish its work if all of its child tasks have finished. This guarantee is fundamental to structured concurrency. It prevents you from accidentally leaking child tasks.

When a task is cancelled, all subtasks that are descendents of that task will be automatically cancelled too.

Tasks are not stopped immediately when cancelled. They must check if they have been cancelled.

You can check for cancellation from anywhere, async or not. Design your code with cancellation in mind.

You can use try Task.checkCancellation(), which throws an error if the task is cancelled. You can also use if Task.isCancelled.

You might wish to return a partial result if a task is cancelled. If doing so, you must document this.

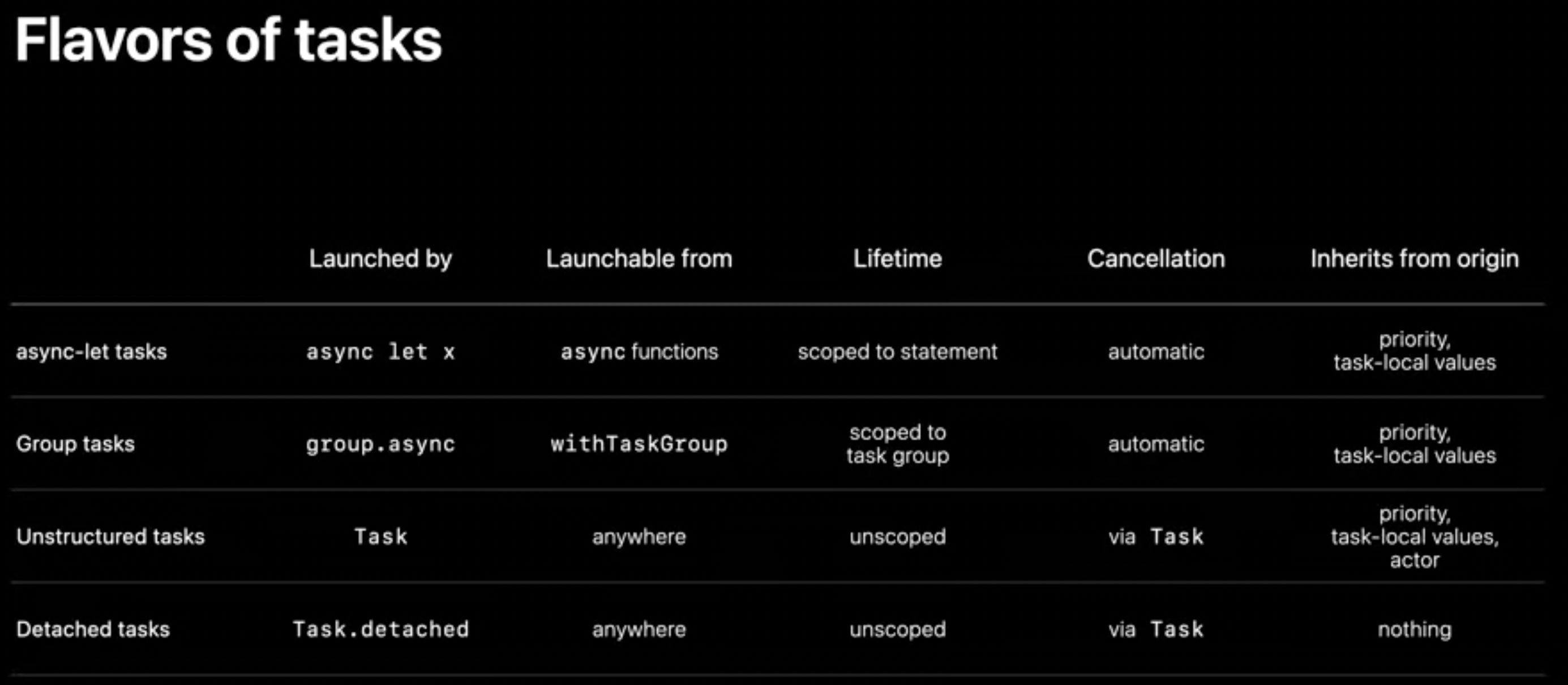

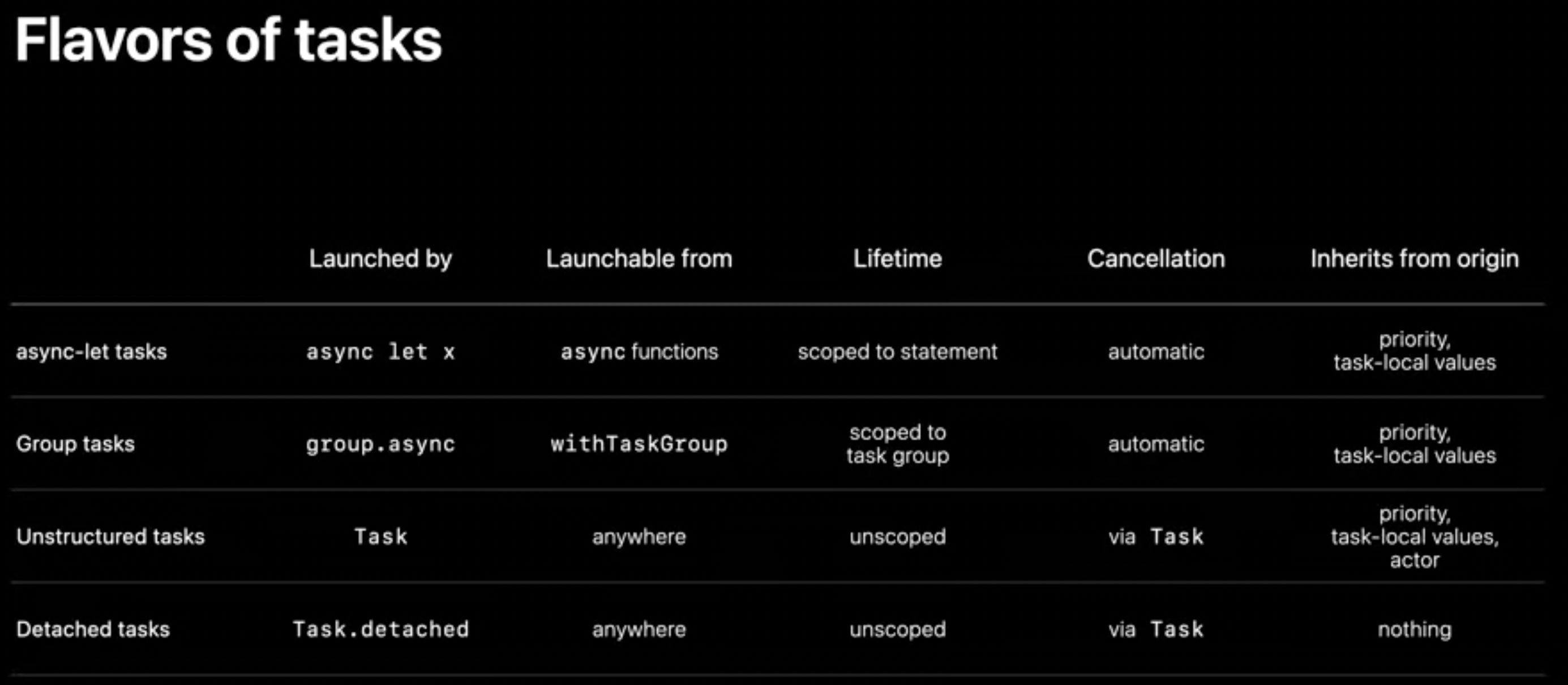

They fall into two categories:

Here’s a summary from the end of the talk:

async let result = URLSession.shared.data(…) – this creates a new task, a child of the task that created it.

You then need to try [await] result.

If one async let task fails before another one is awaited, Swift will automatically mark the un-awaited task as cancelled, and then await for it to finish, before ending the function.

Provides a dynamic amount of concurrency. Use withThrowingTaskGroup.

Tasks added to a group cannot outlive the scope of the block in which the group is defined.

You add tasks to a group using group.async. Once added to a group, child tasks are executed immediately and in any order.

A task group will await all of its child tasks when it goes out of scope. If a child task fails, the other tasks in the groups will be cancelled.

Not all tasks fit a structured pattern:

async contextsWe use the Task { … } initializer. “Swift will schedule the task to run on the main actor as the originating scope” (?)

These tasks “inherit actor isolation and priority of the the origin context” (?), but the lifetime is not confined to any scope. We must manually handle the things that structured concurrency would have handled automatically — cancellation and awaiting the result.

In their example, they show how to kick off a task in response to a UICollectionView telling us it’s going to display a cell. They show that we can access the containing class’s mutable state (a dictionary) inside the task body without a compiler error, because “the delegate class is bound to the main actor (@MainActor), and the new task inherits that, so they will never run together in parallel”. And they then cancel the task when the cell goes out of view (another delegate callback).

Also unstructured, but they don’t inherit anything from their originating context. In their example, they use it to write some thumbnails to a file. Use Task.detached { … }. You can pass e.g. (priority: .background).

For example, if you try to modify a dictionary inside the body of a task group, you’ll get a compiler error “mutation of captured var ‘foo’ in concurrently-executing code”.

Task creation takes a closure of a new type @Sendable. Its body is not allowed to capture mutable variables. They should only capture value types, actors, or classes that implement their own synchronization.

See the session “Protect mutable state with Swift actors”.

In the particular example in this talk, they instead make each task return a value, and then use a for try await (id, thumbnail) in group (the group conforms to AsyncSequence) in order to store the values in a dictionary — this loop runs sequentially.

struct Counter {

// (...)

mutating func increment() -> Int {

// (...)

}

}

var counter = Counter()

Task.detached { counter.increment() }

This gives the compiler error “Mutation of captured var ‘counter’ in concurrently-executing code”.

Actors provide synchronization for shared mutable state. They isolate their state from the rest of the program. All access to that state goes through the actor, which ensures mutually-exclusive access to its state.

They are a new type of reference type in Swift.

External interactions with an actor must be performed asynchronously (i.e. with await).

Calls within an actor are synchronous, and synchronous code always runs uninterrupted.

OK, synchronous code runs uninterrupted, but actors often interact with each other, or with other asynchronous code in the system.

I do not understand the example they are showing here. They have two separate tasks accessing the actor’s shared image cache separately. It has something to do with the fact that there’s a suspension point in the middle where they fetch an image. “We don’t have any low-level data races, but because we carried assumptions about state across an await, we ended up with a potential bug.” But why did it allow two tasks to be executing the same method on the actor simultaneously?

“Actor reentrancy prevents deadlocks and guarantees forward progress, but it requires you to check your assumptions across each await.”

To design well for reentrancy:

await.”await (e.g. about global state, clocks, …)e.g. making an actor conform to Equatable.

extension LibraryAccount: Equatable {

static func ==(lhs: LibraryAccount, rhs: LibraryAccount) -> Bool {

lhs.idNumber == rhs.idNumber

}

}

Although it’s a static function performing this check, it’s OK, because it’s only accessing immutable state of the actor.

But, now let’s consider trying to implement Hashable, which requires a synchronous instance method hash(into: hasher: inout Hasher). But that gives the error “actor-isolated method hash(into:) cannot satisfy synchronous requirement”. To fix this, we add the nonisolated keyword to the method. This means that the method will be treated as being outside the actor, even though it syntactially is inside the actor.

“Like functions, a closure might be actor isolated, or it might be nonisolated.”

This means that, for example, we can have an actor method that calls someArray.reduce { someOtherActorMethod() }, because we know it’s going to execute synchronously. The closure is isolated to the actor.

But, if, for example, we instead of someArray.reduce we use Task.detached, we need to use await someOtherActorMethod() inside the closure. The closure is not isolated to the actor.

(I don’t know if these concepts of “(not) isolated to the actor” are some sort of attribute of the type or if it’s something more contextual than that)

What if we passed a instance of a mutable class into an actor, and the actor then returned this value to something outside the actor? Then we’d have a handle into mutable state inside the actor.

Types that are safe to use concurrently are Sendable types. They can be shared across actors.

Many different kinds of types are Sendable:

@Sendable function types (we’ll return to these)Sendable describes a common but not universal property of types. Swift will eventually prevent non-Sendable types from being shared (it will become an error to pass a non-Sendable type across actor boundaries).

To declare a type as Sendable, add a conformance to that property. Swift will then check that this makes sense (e.g. by checking the properties). Similar to other protocols, we can propagate Sendable by adding a conditional conformance.

@Sendable functionsIt places the following restrictions on closures:

Sendable type (to make sure that the closure cannot be used to move non-Sendable types across actor boundariesWe’ve been relying on this attribute already, e.g.

static func detached(operation: @Sendable () async -> Success) -> Task<Success, Never>

So, the “Mutation of captured var ‘counter’ in concurrently-executing code” error we saw at the beginning comes before detached expects a @Sendable closure, but it’s trying to capture a mutable variable.

There’s a special actor called the main actor. (**I don’t really understand what the word “actor” means when used here, and how it’s related to the concept of actor — i.e. types declared with the actor keyword — we’ve seen so far; are they the same thing or do they just have the same general principle of synchronisation? e.g. what would be the main actor’s mutable shared state and is access to it enforced?)

“Interacting with the main thread is a whole lot like interacting with an actor — if you know you’re already running on the main thread, you can safely access and update your UI state. If you aren’t running on the main thread, you need to interact with it asynchronously. This is exactly how actors work”.

There’s a special actor to represent the main thread, called the main actor.

It differs from a normal actor in two important ways:

@MainActor attribute to say that it must be executed on the main actor. If you call it from outside the main actor, you need to await.Types can also be placed on the main actor (with @MainActor) — this implies that all methods and properties of the type are MainActor. Individual members can be opted out with nonisolated.

How does this interact with framework types? Are they already marked? Their example shows @MainActor class MyViewController: UIViewController.

A bunch of stuff about boats and pineapples and chickens. Goes over stuff from last year’s actor talk.

Sendable constraints in Task APIsWherever tasks can exchange data, there is a Sendable constraint. For example struct Task<Success: Sendable, Failure: Error> { ... }.

Sendable inferenceInferred (e.g. for structs with Sendable properties) by the compiler for non-public types.

Sendable checking for classesClasses can only be made Sendable under very narrow circumstances — e.g. when a final class only has immutable storage.

You can implement reference types that perform their own internal synchronisation. They’re conceptually Sendable but the compiler has no way to reason about it. You can mark the types as @unchecked Sendable in this case.

(I don’t really understand how to use thread-related synchronisation mechanisms to implement Sendable — does that not require some understanding of how Swift’s runtime uses threads to implement concurrency?)

Only one task can execute on an actor at a time — is this something that might help me understand the re-entrancy thing that confused me in last year’s actor’s session? When an actor method suspends, is the calling task no longer considered to be executing on the actor?

“Actor isolation is determined by the context you’re in”.

@Sendable stay on the actor and are actor isolated when they’re in an actor isolated contextTask initializer inherits actor isolation from its context (the created task will be scheduled on the same actor as it was initiated from) What — I don’t get this; what does it even mean to be “scheduled on an actor”? Their example shows some mutable actor state being accesesed inside Task { ... }’s closure.await. This closure is called non-isolated code.Non-isolated code is code that does not run on any actor at all. You can explicitly make an actor’s method nonisolated (we know this bit already).

“Non-isolated async code executes on the global cooperative pool” (what?)

The phrase “isolation domain” is used (?)

@MainActor attribute to a closureTask { @MainActor in

...

}

“The goal of the Swift concurrency model is to eliminate data races. What that really means is that it eliminates low-level data races, which involve data corruption. You still need to reason about atomicity at a higher level”.

As we said, actors run one task at a time. But, when you stop running on an actor, it can run other tasks. This ensures that the program makes progress, eliminating the potential for deadlocks. This requires you to consider your actor’s invariants carefully around await statements. Otherwise you can end up with a high-level data race, where the program is in an unexpected state even though no data is corrupted.

But the example they show here is an async function that fetches an island’s food asynchronously, then adds something to it, then sets the food. (This is different to the reentrancy thing we saw before, right?)

(First of all, the Swift compiler will reject an attempt to outright modify an actor-isolated property from outside a non-isolated context.)

Think in terms of synchronous , transactional operations. Keep async actor operations simple. Take care that your actor is in a good state at each await operation.

Programs often rely in handling events in a consistent order — e.g. user input, messages from a server. Effects of each event should appear in the order they happened.

Actors are not the tool for this. They’re not strictly first-in, first-out, and execute the highest-priority work first.

Tools for ordering:

AsyncStream delivers elements in order@preconcurrency import

Silence Sendable warnings for types from an imported module, by using @preconcurrency import FarmAnimals.

This will check things like sending a non-Sendable closure even to a dispatch queue, for example.

TODO: I don’t understand what “blocking” means in the rest of this talk. Does it mean blocking on a system call? I assume it doesn’t just mean a thread performing computations. If it does mean system calls, how does Swift avoid blocking on them? (Also, it also means blocking on another task)

Let’s start by thinking about how it currently works in GCD.

When work is enqueued on a GCD queue, the system brings up a thread to service it — and on a concurrent queue it will do so up to the number of cores. But if a thread blocks and there is more work to be done on the concurrent queue, GCD brings up more threads to drain the remaining work items — to ensure that each core continues to have a thread that executes work at any time, and also to potentially help unblock the blocked thread.

This can lead to lots of threads (I’m being vague, they have a proper explained example), known as thread explosion (see previous WWDC talks about risks e.g. deadlock, memory overhead (stack etc), context switching. As blocked threads become available again, the scheduler has to timeshare the threads (which for reasons I didn’t fully follow is not a good thing in excess).

Now let’s think about Swift concurrency, which is “built with performance in mind”.

In Swift, there are no thread context switches, and no blocked threads. Instead we have lightweight objects known as continuations to track the progress of work. We now only pay the cost of a function call instead.

Swift only creates as many threads as there are CPU cores.

Swift’s concurrency model and semantics have been designed with the guarantee that threads will not block.

Relevant language features:

await and non-blocking of threadsThey then go into some details of how the call stack works with await (local variables stored in async frame on heap, current stack frame replaced)

Runtime contract: threads are always able to make forward progress.

Integrated OS support: new cooperative thread pool, default executor for Swift, width limited to the number of CPU cores.

await and atomicity — no guarantee that the thread which executed the code before the await will execute the continuation as well

await

await

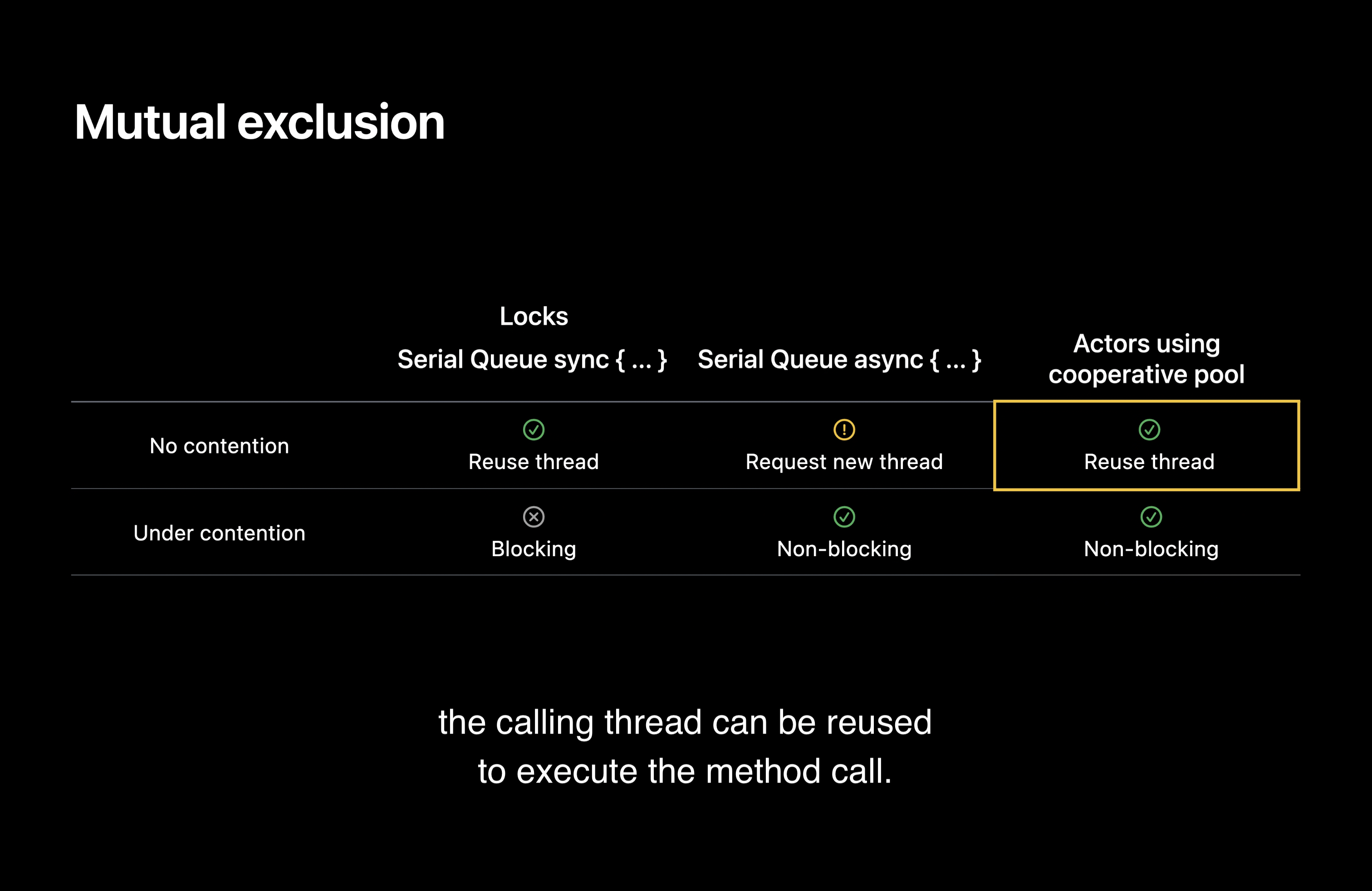

await, actors, task groups. Primitives like os_unfair_lock and NSLock in synchronous code are safe for locking, in a tight well-known critical section “because the thread holding the lock is always able to make forward progress towards releasing the lock; as such, while the primitive might block the thread for a short period of time under contention, it does not violate the runtime contract of forward progress” (? I think the key part here is that it’s only whilst the lock is under contention). But there’s no compiler support to help you use locks correctly. On the other hand, semaphores and condition variables (DispatchSemaphore, pthread_cond, NSCondition, pthread_rw_lock etc) are unsafe to use with Swift concurrency, because they “hide dependency information from the Swift runtime but introduce a dependency in execution in your code” meaning that the compiler cannot make the correct scheduling decisions. (I’m not sufficiently familiar with these two different types of concurrency primitives to understand why locks are OK and semaphores not; I had always just thought of a semaphore as some sort of more snazzy lock). In particular, don’t use these primitives “to wait across task boundaries” (their example creates a semaphore, then creates a Task { await asyncUpdateDatabase(); semaphore.signal() }, then outside that block calls semaphore.wait(), because “such a code pattern means that a thread can block indefinitely against the semaphore until another thread is able to unblock it. This violates the runtime contract of forward progress for threads.” “To help you identify unsafe uses of such unsafe primitives in your codebase, we recommend testing your apps with the LIBDISPATCH_COOPERATIVE_POOL_STRICT=1, which runs the app in a modified debug runtime which enforces the invariant of forward progress.” (I have no idea what it means when it says that it “enforces” it or how it does it.) “When running your apps with this environment variable, if you see a thread from the cooperative thread pool that appears to be hung, it indicates the use of an unsafe blocking primitive” (their screenshot of the debugger shows a thread on queue com.apple.root.user-initiated.qos-cooperative that’s waiting in semaphore_wait_trap).Now we’ll learn about what synchronisation primitives can be used with Swift Concurrency. (Not sure if the answer is just going to be “actors”…)

sync { … }: when there’s no contention, thread is reused; when there’s contention the thread is blocked, which triggers the earlier-described thread explosionasync (not sure why); “the primary benefit of dispatch async is that it is nonblocking, so even under contention it will not lead to thread explosion”, but, even when there is no contention, “Dispatch needs to request a new thread to do the async work, while the calling thread continues to do something else. Hence, frequent use of dispatch async can leave to excess thread wakeups and context switches.”

Now, actors apparently “combine the best of both worlds by taking advantage of the cooperative thread pool for efficient scheduling. When you call a method on an actor that is not running, the calling thread can be reused to execute the method call. In the case where the called actor is already running, the calling thread can suspend the function it is executing and pick up other work.”

Now they talk about “actor hopping” and how in their example app (which I don’t remember) e.g. when the sports feed actor wants to do something on the database actor, and the database actor is not being used, the thread can directly hop from the sports feed actor to the database actor. Now say that some other actor (weather feed) wants to use the database actor whilst it’s processing the sports feed work item. Well, the weather feed work will be suspended and its thread freed up to do other work.

(Is the idea of an actor being suspended, which they alluded to here, a shorthand for something else; is it a term used elsewhere?)

Now it’s going to talk about why actor reentrancy is important. Their example, in GCD is:

fetchLatestForDisplay()

backUpToiCloud()

Since dispatch queues are strictly FIFO, this means that you might be in a situation where loads of low priority work items need to be executed before something high priority further down the queue can be executed. This is called priority inversion.

Actors don’t have this FIFO property and can boost the priority of something in the queue. But there’s something else I don’t yet understand.

Now they talk some more about reentrancy and I think my brain is quite saturated. (this is around 34:50). So will have to come back to this. Hopefully I can get away with not having to understand this for now; I guess for the most part things just work?

MainActor

Now they’re talking about this and how it’s different to other actors (I think a key point being that switching to this actor necessarily requires switching thread). They show some code that apparently keeps hopping between the main thread and another actor, and they say that this can have high overhead. It says that in a case like this you might want to consider making your code batch the work destined for the main thread to reduce the number of switches.

I’m pretty ignorant about SPM; haven’t had to use it much until now (2024-07-20). A cobbled-together history from looking at WWDC sessions:

libSwiftPM)A Swift script that can perform actions on a Swift package. Uses a special API provided for this purpose.

They’re themselves implemented as Swift packages. A plugin can use more than one source file. A Swift package can define more than one plugin. Specialized packages can be private to the package that provides it. But also can be made public by defining it as a package product.

There are two kinds:

Accessible from the right-click menu on Package.swift in Xcode. It then asks you which of the targets you wish to pass to the plugin. You can choose to invoke on the whole package. You can also pass custom arguments to the plugin.

You can also use the swift package plugin subcommand. swift package plugin --list to list the action verbs, and swift package <verb> to invoke plugin.

Each plugin runs as a separate process (in a sandbox that prevents network access and only has limited file system write access, e.g. build outputs directory). They have access to:

Plugins can extend sandbox, ask for permission to also modify files in the package source directory.

Plugins can emit warnings and errors.

The PackagePlugin provides the APIs.

@main

struct MyPlugin: CommandPlugin {

// Entry points specific to package capability

}

createBuildCommands(context: PluginContext, target: Target) throws -> [Command]

To specify which build tool plugins to apply to a package target, there’s a new plugins parameter available on targets in Package.swift.

I wonder if there’s a way to preview the generated code?

Let’s use some popular packages to build a service. They are going to build an app that lists events and lets you create one.

Now we’ll look at database drivers. We’ll use Postgres today; we’ll use the PostgresNIO package maintained by Vapor and Apple.

PostgresClient to query the databasequery method returns an AsyncSequence of rowsawait postgresClient.run() which occupies the current task (seems a bit like running a run loop), so they create a task group to allow them to run that and the Vapor application concurrentlyPostgresQuery has string interpolation magic that actually translates a string literal into a query with bindings to avoid injection attacksObservability

Logger from swift-log

Counter from swift-metrics: Counter("list.events.counter").increment()

withSpan from swift-distributed-tracing (not sure how it works, apparently it’s explained in last year’s “Beyond the basics of structured concurrency”)postgresClient.query and accessing its server-sent error message (error.serverInfo?[.message])Finding packages

Same person who gave the WWDC 2024 Swift on Server talk I wrote about above.

I watched this talk and didn’t fully absorb it; here are a few things I scribbed down whilst watching it. One to return to, armed with more concurrency knowledge.

run methodswith-style methods and how closures inherit isolation, and something about not being to convert to MainActor-isolatedinout sending

with-style methodscancelOnGracefulShutdown — don’t you lose logs?This is by John McCall, who is very active in the Swift forums. I watched it once and don’t have much to say about it yet, because it’s pretty dense. He also has a Mastodon thread where he goes into more detail about things he had to leave out or simplify.

I think that perhaps some of this stuff dovetails with the non-copyable types thing; it talks a lot about things being copied and what that means.

One to return to.